Fisher, Neyman & Pearson: Advocates for One-Sided Tests and Confidence Intervals

Author: Georgi Z. Georgiev, Published: Aug 6, 2018

Despite the bad press one-sided tests get these days, the fathers of modern statistical approaches endorsed them in one form or another and also used them often when providing examples in their writings. It is my opinion that this is at least partly due to the fact that all three were applied statisticians whose professional success depended on solving actual business and war-time problems, be it on a production line, crop research facility or artillery range.

Ronald Fisher and One-Sided Tests

Of the three, Fisher’s endorsement is least obvious, however it is nonetheless pretty clear and comes in three forms:

- Endorsement of the most sensitive test available for the task in multiple places in his major works like his 1925 "Statistical Methods for Research Workers" [1] and the 1935 "The Design Of Experiments" [2].

- Multiple examples of one-sided tests throughout his major works and lectures.

- Statements about one-sided significance calculations

The first form is important since the one-sided test is the most sensitive (most powerful) one available for a one-sided hypothesis, thus it should be preferred, when it is applicable to the question at hand. The examples, many of them coming from his own work as a researcher and statistician, show that he was keen on using them in practice.

Examples of Fisher using one-sided tests

Fisher’s most famous one-sided test example is the tea tasting experiment. In it the null hypothesis is that the lady cannot discriminate whether milk or tea was first added to the cup, while the alternative is that she can. Obviously, only high proportion of correct identification of cups would lead us to reject the null, so if there was a high proportion of incorrect identification of cups we would not count that as evidence for her abilities, no matter how extremely unlikely the outcome. High here should be understood relative to pure guessing/chance.

However, later Fisher states the hypothesis differently: "that the judgements given are in no way influenced by the order in which the ingredients have been added". This is clearly a two-sided hypothesis, as conveyed by the word "in no way". A one-sided hypothesis would have been that the judgements given were not positively influenced by the order in which the ingredients have been added, that is, that the lady is not able to positively discriminate between the cups better than chance. Upon observing a higher than average failure to determine the order of ingredients we may stipulate that the lady’s judgements are in fact influenced inversely.

We see another example of Fisher using one-sided test when discussing potential evidence for a genetic component to criminality by comparing the criminal records of monozygotic versus dizygotic like-sex twins: "Statistical Methods for Research Workers" (p.98-101 of the 5-th edition). In the opening paragraph a one-sided hypothesis is specified: "Do Lange's data show that criminality is significantly more frequent among the monozygotic twins of criminals than among the dizygotic twins of criminals?" (emphasis mine). Fisher first computes the χ2 statistic and declares "The probability of exceeding such a deviation in the right direction is about I in 6500" (emphasis mine), clearly speaking about rejecting a left-sided null.

He then presents more precise calculations that are from a one-sided Fisher’s Exact test. However, when presenting the results, we see the statement "This amounts to 619/1330665, or about 1 in 2150, showing that if the hypothesis of proportionality were true, observations of the kind recorded would be highly exceptional." which corresponds to rejecting a two-sided hypothesis.

We see the same example presented in the paper "The Logic of Inductive Inference" [3] read in front of the Royal Statistical Society on Dec 18, 1934. On page 48-50 he presents calculations corresponding to a one-sided Fisher’s Exact test and arrives at the same probability of ~0.000465 (R code), stating: "The significance of the observed departure from proportionality is therefore exactly tested by observing that a discrepancy from proportionality as great or greater than the observed, will arise, subject to the conditions specified by the ancillary information, in exactly 3,095 trials out of 6,653,325, or approximately once in 2,150 trials." which translated to the research hypothesis amounts to the rejection of the null hypothesis that "the causes leading to conviction had been the same in the two classes of twins".

In this second paper he makes no mention of a directional alternative or null hypothesis, despite the calculations being for a directional alternative hypothesis. It turns out, Fisher makes claims that correspond to the rejection of a point null (thus accepting a two-sided alternative) based on a one-sided probability.

Was Fisher sloppy in his research or in his writing? I do not have that impression from reading his works and we clearly see that he uses a two-tailed test when the alternative hypothesis is two-sided (point null), for example in the "Experiment on Growth Rate" chapter the p-value derived from the Student’s T-test is a two-tailed one. Based on this, my guess is that the ambiguity might be due to the fact that his main focus is the mathematical apparatus, which is based on calculations for the worst-case of the less frequent or equal proportions hypothesis, which is that the proportions were equal. I guess it is easy to confuse that with the fact that the research null hypothesis is that of no difference, when in fact it was clearly stated as a one-sided one in the opening paragraph.

Guidelines for calculating probabilities for one-sided hypotheses

Fisher clearly realized the need for considering one-sided hypotheses, as evident by this statement in "Statistical Methods for Research Workers" related to usage of t-tables (page 118 (5-th edition) as a part of the chapter about difference in means):

"if it is proposed to consider the chance of exceeding the given values of t, in a positive (or negative) direction only, then the values of P should be halved." wherein "P" refers to "the probability of falling outside of the range ±t, equivalent to a p-value, while t is a value from a T-distribution.

according to Fisher when we want to consider the chance of exceeding a given absolute critical value |t| in a specific direction (-t or +t), the p-value corresponding to a given t-score should be halved to account for the broader question at hand which means that a narrower set of the possible results can answer it in the positive. The same observed t-value results in a lesser probability of observation under a directional null, therefore, to maintain a desired level of significance with a directional hypothesis one needs to adjust the critical score in the direction of the null hypothesis.

While this is a very clear statement about the acceptability of one-sided hypotheses and clear guideline on how to calculate probabilities related to them, practitioners might have easily missed this remark in the book and this might have contributed to the unwarranted wide adoption of two-sided significance calculations instead of one-sided ones.

Fisher replying to questions on one-sided vs two-sided tests

Finally, I was able to find in Lehmann (2012) [4] a mention of a letter from Fisher to Hick in response to a question about one-tail and two-tail chi square tests writes that according to him this is for the researcher to decide ("In these circumstances the experimenter does know what observation it is that attracts his attention.") and that: "This question can, of course, and for refinement of thought should, be framed as: Is this particular hypothesis overthrown, and if so at what level of significance, by this particular body of observations?".

In my interpretation this means that if a researcher is interested in checking if he can overthrow a hypothesis of "negative or zero difference" between the measurements of interest, then he should do so. In saying "this particular hypothesis", referring to the null hypothesis, Fisher makes it clear that there are infinitely many possible null hypothesis, making clear the distinction between a null hypothesis and a nil hypothesis.

He also makes it clear that he understands that the tailedness of the distribution (Chi-Square is one-tailed) has nothing to do with the tailedness of the hypothesis, otherwise his response would have simply been that Chi-Square is a one-tailed distribution and the question does not make sense.

Jersey Neyman on One-Sided Confidence Intervals

In his 1937 "Outline of a Theory of Statistical Estimation Based on the Classical Theory of Probability" (Neyman, 1937) [6] J.Neyman makes a clear statement about the acceptability and desirability of using one-sided confidence intervals, which, as we know, correspond to one-sided tests of significance. This statement is in the form of a whole sub-chapter of the paper titled "One-sided Estimation" in which it is stated:

"The application of these regions of acceptance having the above properties is useful in problems which may be called those of one-sided estimation."

Neyman goes on: "In frequent practical cases we are interested only in one limit which the value of the estimated parameter cannot exceed in one or in the other direction.". Here the word "cannot exceed" has been interpreted by some (Lombardi & Hurlbert, 2009) [7] in a rather direct way, not taking into account the examples that he provides immediately after:

"When analyzing seeds we ask for the minimum per cent. of germinating grains which it is possible to guarantee. When testing a new variety of cereals we are again interested in the minimum of gain in yield over the established standard which it is likely to give. In sampling manufactured products, the consumer will be interested to know the upper limit of the percentage defective which a given batch contains. Finally, in certain actuarial problems, we may be interested in the upper limit of mortality rate of a certain society group for which only a limited body of data is available." (emphasis mine)

The phrase "interested" has caused confusion among practitioners, though unnecessarily, since it is obvious that "interest" and "question we ask of the data" are used synonyms ("ask for", followed by "again interested"). It is obvious from these examples that the phrase "cannot exceed" from the previous statement should rather be interpreted not as "numerically or practically cannot exceed in a given direction" but as "them exceeding in the given direction is not a positive answer to our question".

For if we were to take these literally, then we should also stipulate that we cannot be interested in the maximum percent of germinating seeds, or that the new variety of cereals cannot be performing worse than the established standard, or that a quality assurance engineer cannot be interested in the lower band of percentage defective units in a batch, or that a health professional cannot be interested in the lower limit of the mortality rate of a given group. This would be absurd and given this contradiction I believe we should interpret "interested in" as "asking a question about" and similarly the word "cannot" in the manner in which it was intended: in the context of the null hypothesis of interest.

Neyman could not have been clearer about it: "In all these cases we are interested in the value of one parameter, say, θ1, and it is desired to determine only one estimate of the same, either θ (E) or ̿ θ (E), which we shall call the unique lower and the unique upper estimate respectively. If θ1 is the percentage of germinating seeds, we are interested in its lower estimate θ (E) so as to be able to state that θ (E) ≤ θ1, while the estimation of the upper bound 0 (E) is of much less importance. On the other hand, if it is the question of the upper limit of mortality rate, θ2, then we desire to make statements as to its value in the form θ2 ≤ ̿ θ (E), etc."

Thus, my conclusion is that an "interest" is clearly nothing more than "a question" and there is nothing wrong in asking the question "is X lower than a boundary which in Y% of such intervals will contain the true value". With regards to the ability of the true value to depart in any direction I believe the answer is more than clear. It is also painfully obvious that there is no prediction nor prior beliefs in any of the examples given and that no such wording is used by Neyman, confirming their irrelevance in the usage of one-sided confidence intervals. What is relevant is the question being asked and the answer in which we have immediate interest in.

Neyman & Pearson on One-Sided Hypotheses

In their major work "On the Problem of the most Efficient Tests of Statistical Hypotheses" (Neyman & Pearson, 1933) [5] Jersey Neyman and Egon Pearson give us a detailed example of their approach to one-sided and two-sided hypothesis testing. In subsection III-b "Illustrative Examples" they pose a two-sided question which they proceed to explore as a combination of the two possible one-sided hypotheses: that the mean of the sampled population is greater than a given value and that the mean of the sampled population is less than that value.

(A part of the 1932 “On the Problem of the most Efficient Tests of Statistical Hypotheses” paper by J.Neyman & E.Pearson)

(A part of the 1932 “On the Problem of the most Efficient Tests of Statistical Hypotheses” paper by J.Neyman & E.Pearson)

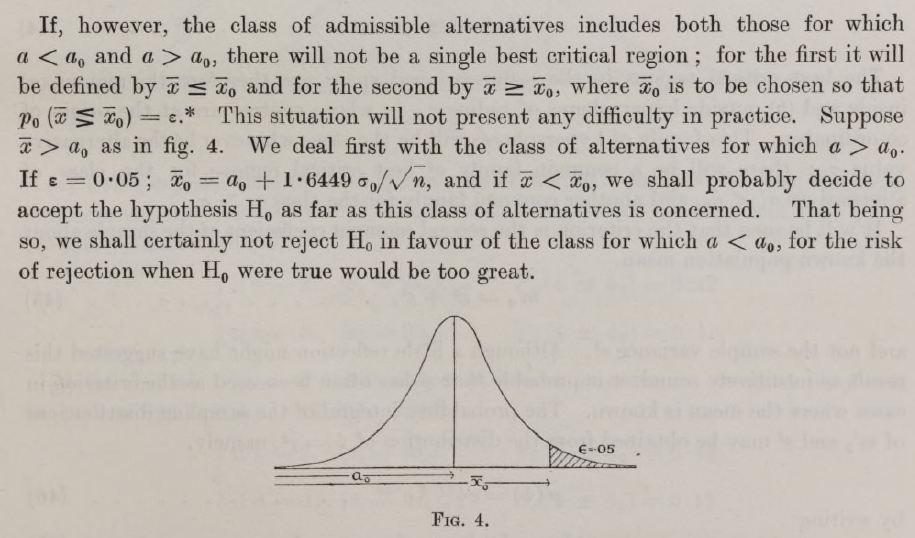

The two-sided case is converted to an approach in which each of the two one-sided hypotheses is examined, starting with the alternative hypothesis wherein the observed effect lies. First, for a significance level of α = 0.05 (ε = 0.05 in the original notation) Neyman & Pearson state a critical boundary of 1.6449 as the critical region for accepting the class of alternative hypotheses that cover the space in which the population mean is greater than the given value. They state that if a value falls within the region H0 should be rejected and H1 accepted and if it does not, then H0 should be accepted. Then they state that they should certainly not reject H0 in favor of the opposite alternative (of the population mean being less than the given value) due to the risk of rejection of a true H0 being "too great".

There is no treatment of a point null versus a composite alternative as is customary nowadays. The alternative space is disjointed and they do not consider it as a single alternative at all. One can take this as Neyman and Pearson refusing to consider a non-directional alternative hypothesis as a valid mode of post-hoc inquiry.

If my reading of this is correct, then this is an even more extreme endorsement of one-sided tests than one would expect. I think there is no issue in using a two-tailed test when it corresponds to a question one is interested in asking and then the appropriate critical regions for a UMP test should be those of α/2 on both sides of the rejection region.

R codes for Fisher's examples

These can be used to perform the Fisher Exact test with the data from the two examples cited in this article. Note that to get the same probabilities as Fisher you need to use the "alternative="greater"" option, meaning that you perform a one-sided calculation.

R code for Fisher's tea tasting experiment:

TeaTasting <- matrix(c(3, 1, 1, 3), nrow = 2, dimnames = list(Guess = c("Milk", "Tea"), Truth = c("Milk", "Tea")))

fisher.test(TeaTasting, alternative = "greater")

R code for Fisher's twin study on the genetics of criminality:

Convictions <- matrix(c(15, 3, 2, 10), nrow = 2, dimnames =list(c("Monozygotic", "Dizygotic"),c("Convicted", "Not convicted")))

fisher.test(Convictions, alternative = "greater")

References

[1] Fisher R.A. (1925) "Statistical methods for research workers". Oliver & Boyd, Edinburg

[2] Fisher R.A. (1935) "The design of experiments", Oliver & Boyd, Edinburgh

[3] Fisher R.A. (1935) "The Logic of Inductive Inference", Journal of the Royal Statistical Society 98(1):39-82

[4] Lehmann E.L. (2012) "The Fisher, Neyman-Pearson Theories of Testing Hypotheses: One Theory or Two?", Journal of the American Statistical Association 88(424):1242-1249; https://doi.org/10.1080/01621459.1993.10476404

[5] Neyman J., Pearson E.S. (1933) "On the Problem of the Most Efficient Tests of Statistical Hypotheses", Philosophical Transactions of the Royal Society of London A-231:289-337; https://doi.org/10.1098/rsta.1933.0009

[6] Neyman J. (1937) "Outline of a theory of statistical estimation based on the classical theory of probability", Philosophical Transactions of the Royal Society of London, A-236:333-380; https://doi.org/10.1098/rsta.1937.0005

[7] Lombardi C.M., Hurlbert S.H. (2009) "Misrepresentation and misuse of one-tailed tests", Austral Ecology 34-4:447-468; https://doi.org/10.1111/j.1442-9993.2009.01946.x

Enjoyed this article? Please, consider sharing it where it will be appreciated!

Cite this article:

If you'd like to cite this online article you can use the following citation:

Georgiev G.Z., "Fisher, Neyman & Pearson: Advocates for One-Sided Tests and Confidence Intervals", [online] Available at: https://www.onesided.org/articles/fisher-neyman-pearson-advocates-one-sided-tests-confidence-intervals.php URL [Accessed Date: 22 Feb, 2026].

Georgi Z. Georgiev is an applied statistician with background in web analytics and online controlled experiments, building statistical software and writing articles and papers on statistical inference. Author of the book

Georgi Z. Georgiev is an applied statistician with background in web analytics and online controlled experiments, building statistical software and writing articles and papers on statistical inference. Author of the book